GPT AI Enables Scientists to Passively Decode Thoughts in Groundbreaking Study

In a groundbreaking study, scientists employ a ChatGPT-like AI model to passively decode human thoughts with unprecedented accuracy, unlocking new potential in brain imaging and raising privacy concerns.

Scientists have achieved a startling breakthrough utilizing a GPT LLM to decipher human thoughts. Illustration: Artisana

🧠 Stay Ahead of the Curve

Scientists used a ChatGPT-like AI to passively decode human thoughts with up to 82% accuracy, a startlingly high level.

The breakthrough paves the way for potential applications in neuroscience, communication, and human-machine interfaces.

This advancement raises concerns about mental privacy, emphasizing the need for policies to protect individuals from potential misuse as this technology advances.

May 01, 2023

A team of scientists has made a groundbreaking discovery by employing a Generative Pre-trained Transformer (GPT) AI model similar to ChatGPT to reconstruct human thoughts with up to 82% accuracy from functional MRI (fMRI) recordings. This unprecedented level of accuracy in decoding human thoughts from non-invasive signals paves the way for a myriad of scientific opportunities and potential future applications, the researchers say.

The Study and Methodology

In a study published in Nature Neuroscience, researchers from the University of Texas at Austin used fMRI to record the brain activity of three human subjects as each listened to 16 hours of narrative stories. The team analyzed these recordings to identify the patterns of brain activity that corresponded to individual words.

Decoding words from non-invasive recordings has long been a challenge because fMRI, despite its high spatial resolution, has low temporal resolution. Although fMRI images are detailed, a brief burst of neural activity produces a blood-oxygen signal that rises and falls over roughly 10 seconds, so each image captures the combined signals of approximately 20 English words spoken at a typical pace.
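The arithmetic behind "about 20 words per image" can be checked with a small simulation. This is a toy sketch, not the study's analysis: it assumes evenly spaced words at 2 words per second, a signal that lingers for exactly 10 seconds after each word, and a hypothetical scan interval of 2 seconds. All three constants are illustrative assumptions.

```python
# Toy illustration with ASSUMED numbers: how many words overlap in one fMRI image.
SPEECH_RATE_WPS = 2.0      # assumed speaking rate, words per second
RESPONSE_WINDOW_S = 10.0   # assumed time each word's blood-oxygen signal lingers
TR_S = 2.0                 # hypothetical scan interval: one brain image every 2 s
STORY_S = 60.0             # length of the simulated story in seconds


def word_onsets(duration_s: float, rate_wps: float) -> list[float]:
    """Evenly spaced word onset times for a story of the given length."""
    step = 1.0 / rate_wps
    return [i * step for i in range(int(duration_s * rate_wps))]


def words_in_image(scan_time_s: float, onsets: list[float], window_s: float) -> int:
    """Count words whose lingering signal still overlaps the image taken at scan_time_s."""
    return sum(1 for t in onsets if scan_time_s - window_s < t <= scan_time_s)


onsets = word_onsets(STORY_S, SPEECH_RATE_WPS)
scan_times = [k * TR_S for k in range(int(STORY_S / TR_S))]
counts = [words_in_image(s, onsets, RESPONSE_WINDOW_S) for s in scan_times]

print(f"{len(onsets)} words, {len(scan_times)} images, up to {max(counts)} words per image")
# -> 120 words, 30 images, up to 20 words per image
```

Under these assumptions the one-minute story yields four times as many words as images, and every image after the first 10 seconds blends 20 of them, which is why individual words cannot simply be read off individual scans.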

Before the advent of GPT large language models (LLMs), this task was nearly insurmountable: non-invasive techniques could identify only a few specific words that a subject was thinking. The researchers instead built a continuous decoder around a custom-trained GPT LLM. Because there are far more words to decode than brain images available, the decoder needs a way to propose plausible word sequences, which is exactly where an LLM excels; each candidate sequence is then scored by how well it predicts the recorded brain activity.
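In broad strokes, a decoder of this kind pairs a language model that proposes next words with an encoding model that predicts the brain response a word sequence should evoke, and keeps only the candidates whose predictions best match the recording (a beam search). The sketch below is a heavily simplified toy, not the researchers' code: the `NEXT_WORDS` table stands in for the GPT model, `WORD_RESPONSE` and `CARRYOVER` are a hypothetical two-number "brain response" per word with an assumed 50% spillover from the previous word, and squared error replaces whatever likelihood a real decoder would use.

```python
from typing import Dict, List, Tuple

Vec = Tuple[float, float]

# HYPOTHETICAL toy "language model": plausible next words after a given word.
NEXT_WORDS: Dict[str, List[str]] = {
    "<s>": ["i", "she"],
    "i": ["ran", "sat"],
    "she": ["ran", "sat"],
    "ran": ["home", "away"],
    "sat": ["down", "still"],
}

# HYPOTHETICAL toy "encoding model": the response vector each word evokes.
WORD_RESPONSE: Dict[str, Vec] = {
    "i": (1.0, 0.0), "she": (0.0, 1.0),
    "ran": (2.0, 0.0), "sat": (0.0, 2.0),
    "home": (1.0, 1.0), "away": (3.0, 0.0),
    "down": (0.0, 3.0), "still": (1.0, 2.0),
}

CARRYOVER = 0.5  # ASSUMED share of the previous word's response that lingers


def predict_responses(words: List[str]) -> List[Vec]:
    """Predict one response per word, blurred by the word before it."""
    preds: List[Vec] = []
    for t, w in enumerate(words):
        x, y = WORD_RESPONSE[w]
        if t > 0:
            px, py = WORD_RESPONSE[words[t - 1]]
            x, y = x + CARRYOVER * px, y + CARRYOVER * py
        preds.append((x, y))
    return preds


def score(words: List[str], observed: List[Vec]) -> float:
    """Negative squared error against the recording so far (higher is better)."""
    preds = predict_responses(words)
    return -sum((px - ox) ** 2 + (py - oy) ** 2
                for (px, py), (ox, oy) in zip(preds, observed))


def beam_decode(observed: List[Vec], beam_width: int = 2) -> List[str]:
    """Grow sequences from LM proposals, keeping the best-scoring few at each step."""
    beams: List[List[str]] = [[]]
    for _ in range(len(observed)):
        candidates = [beam + [nxt]
                      for beam in beams
                      for nxt in NEXT_WORDS.get(beam[-1] if beam else "<s>", [])]
        if not candidates:
            break
        candidates.sort(key=lambda c: score(c, observed), reverse=True)
        beams = candidates[:beam_width]
    return beams[0]


# Simulate a "recording" from a known sentence, then try to recover it.
recorded = predict_responses(["she", "sat", "down"])
print(beam_decode(recorded))  # ['she', 'sat', 'down']
```

The language model never sees the brain data; it only narrows the search to fluent sequences, while the encoding model decides which of those fluent sequences fits the recording. That division of labor is what lets a decoder output continuous text even though each image blends many words.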

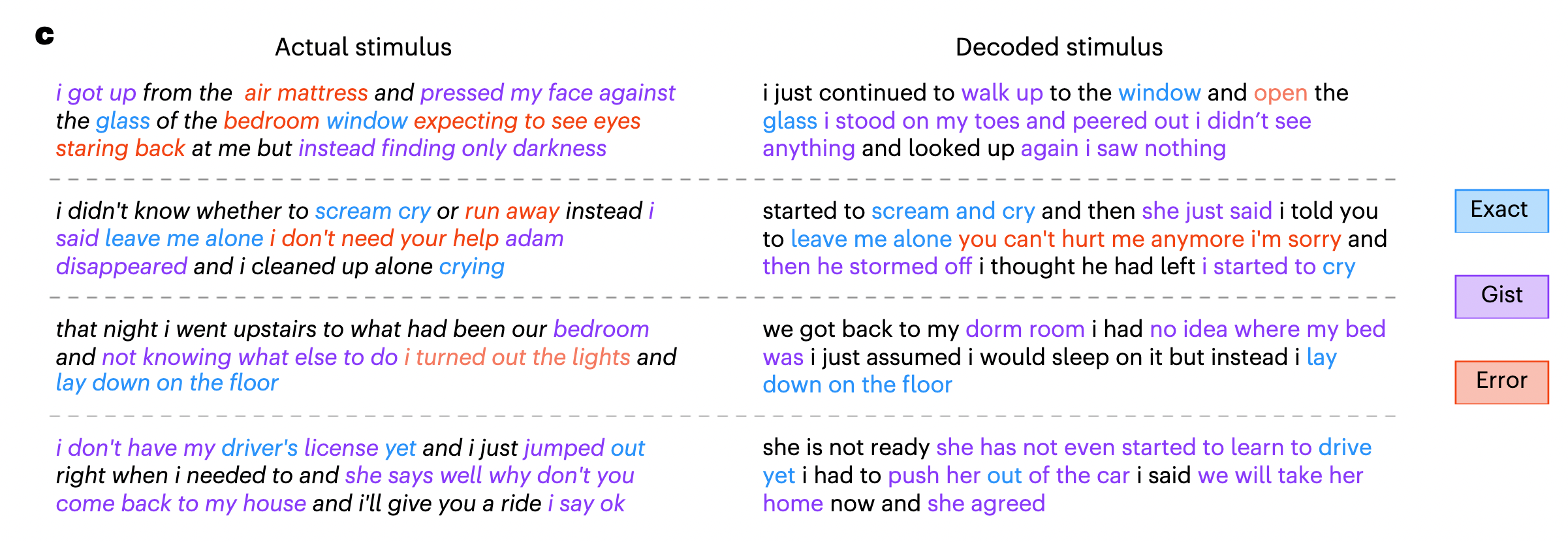

The GPT model generated intelligible word sequences from perceived speech, imagined speech, and even silent videos with remarkable accuracy:

Perceived speech (subjects listened to a recording): 72–82% decoding accuracy.

Imagined speech (subjects mentally narrated a one-minute story): 41–74% accuracy.

Silent movies (subjects viewed soundless Pixar movie clips): 21–45% accuracy in decoding the subject's interpretation of the movie.

The AI model could decipher both the meaning of stimuli and specific words the subjects thought, including phrases such as "lay down on the floor," "leave me alone," and "scream and cry."

Privacy Concerns Surrounding Thought Decoding

The prospect of decoding human thoughts raises questions about mental privacy. Addressing this concern, the research team conducted an additional study in which decoders trained on data from other subjects were used to decode the thoughts of new subjects.

The researchers found that "decoders trained on cross-subject data performed barely above chance," emphasizing the importance of using a subject's own brain recordings for accurate AI model training. Moreover, subjects were able to resist decoding efforts by employing techniques such as counting by sevens, listing farm animals, or narrating an entirely different story, all of which significantly decreased decoding accuracy.
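"Barely above chance" means a decoder's output matches the real words only slightly more often than random guessing would. A minimal sketch of that comparison, assuming a crude position-by-position word-overlap metric and a null baseline of the correct words shuffled into random order, is below. Both the metric and the null (`word_overlap`, `chance_p_value`) are hypothetical illustrations, not the study's evaluation procedure.

```python
import random
from typing import List


def word_overlap(decoded: List[str], actual: List[str]) -> float:
    """Toy metric: fraction of positions where the decoded word equals the actual word."""
    if not actual:
        return 0.0
    hits = sum(d == a for d, a in zip(decoded, actual))
    return hits / len(actual)


def chance_p_value(decoded: List[str], actual: List[str],
                   n_null: int = 1000, seed: int = 0) -> float:
    """Estimate how often random guesses score at least as well as the decoder."""
    rng = random.Random(seed)
    observed = word_overlap(decoded, actual)
    at_least_as_good = 0
    for _ in range(n_null):
        guess = list(actual)  # null baseline: the right words in a random order
        rng.shuffle(guess)
        if word_overlap(guess, actual) >= observed:
            at_least_as_good += 1
    # Add-one correction keeps the estimate strictly above zero.
    return (at_least_as_good + 1) / (n_null + 1)


actual = "she sat down on the floor and began to cry".split()
good_decode = list(actual)
bad_decode = "a dog barked at me from across the busy street".split()

print(chance_p_value(good_decode, actual))  # small: random guesses almost never do this well
print(chance_p_value(bad_decode, actual))   # 1.0: every random guess does at least as well
```

A decoder trained on one person and applied to another would land near the `bad_decode` end of this scale: its output is hard to distinguish from what shuffled guesses produce.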

Nevertheless, the scientists acknowledge that future decoders might overcome these limitations, and that even inaccurate decoder predictions could be misused for malicious purposes, much like lie detectors.

A brave new world is arriving, and “for these and other unforeseen reasons,” the researchers concluded, “it is critical to raise awareness of the risks of brain decoding technology and enact policies that protect each person’s mental privacy.”
