Generative Agents: Stanford's Groundbreaking AI Study Simulates Authentic Human Behavior

Stanford researchers present a groundbreaking AI development with Generative Agents that simulate authentic human behavior by incorporating memory, reflection, and planning capabilities. We break down why this matters.

A screenshot of a demonstration by Stanford researchers of how Generative Agents interact in a sandbox. Credit: https://reverie.herokuapp.com/arXiv_Demo/

🧠 Stay Ahead of the Curve

Stanford AI researchers introduce Generative Agents, computer programs that simulate authentic human behavior using generative models.

Generative Agents improve upon existing LLMs by introducing memory, reflection, and planning capabilities, enabling long-term coherence and dynamic behaviors.

Concerns arise about parasocial relationships and the anthropomorphization of AI, with potential negative consequences for society and the displacement of humans in crucial tasks.

April 10, 2023

A new study by a team of Stanford AI researchers introduces a groundbreaking concept: Generative Agents, computer programs that employ generative models to simulate authentic human behavior. The innovative architecture developed by the researchers enables these agents to demonstrate human-like abilities in memory storage and retrieval, introspection on motivations and goals, and planning and reacting to novel situations. Powered by ChatGPT, these agents engage with each other and researchers as if they were genuine human beings.
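To make the three capabilities named above (memory retrieval, reflection, and planning) more concrete, here is a minimal sketch. It is not the researchers' implementation: the `Agent` and `Memory` classes, the agent name "Ava", the hand-assigned importance values, the decay factor, and the keyword-overlap relevance measure are all hypothetical choices made for illustration. In the study itself a language model would produce the reflections and plans; in this sketch simple string templates stand in for it.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    text: str
    created_at: int    # simulation step at which the memory was recorded
    importance: float  # hypothetical 0..1 weight, assigned by hand here


@dataclass
class Agent:
    name: str
    memories: list = field(default_factory=list)

    def observe(self, text, step, importance):
        """Append a new observation to the agent's memory stream."""
        self.memories.append(Memory(text, step, importance))

    def retrieve(self, query, now, k=2, decay=0.9):
        """Return the k memories with the highest combined score.

        Assumed scoring: recency + importance + keyword-overlap relevance.
        """
        query_words = set(query.lower().split())

        def score(memory):
            recency = decay ** (now - memory.created_at)
            words = set(memory.text.lower().split())
            relevance = len(query_words & words) / max(len(query_words), 1)
            return recency + memory.importance + relevance

        return sorted(self.memories, key=score, reverse=True)[:k]

    def reflect(self, query, now):
        """Condense retrieved memories into a note and store it as a new memory."""
        recalled = self.retrieve(query, now)
        note = f"{self.name} reflects on '{query}': " + "; ".join(
            m.text for m in recalled
        )
        self.observe(note, now, importance=0.8)
        return note

    def plan(self, query, now):
        """Derive a next action from the single most relevant memory."""
        top = self.retrieve(query, now, k=1)
        if not top:
            return f"{self.name} has nothing to act on"
        return f"{self.name} will follow up on: {top[0].text}"


if __name__ == "__main__":
    ava = Agent("Ava")
    ava.observe("ate breakfast at home", step=0, importance=0.1)
    ava.observe("decided to host a party at the cafe", step=1, importance=0.9)
    ava.observe("watered the plants", step=2, importance=0.1)
    print(ava.plan("party at the cafe", now=3))
    print(ava.reflect("party at the cafe", now=3))
```

The design point is that reflections are written back into the same memory stream as raw observations, so later retrievals can surface the agent's own conclusions alongside what it saw. This is one plausible way to get the long-term coherence the article describes.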

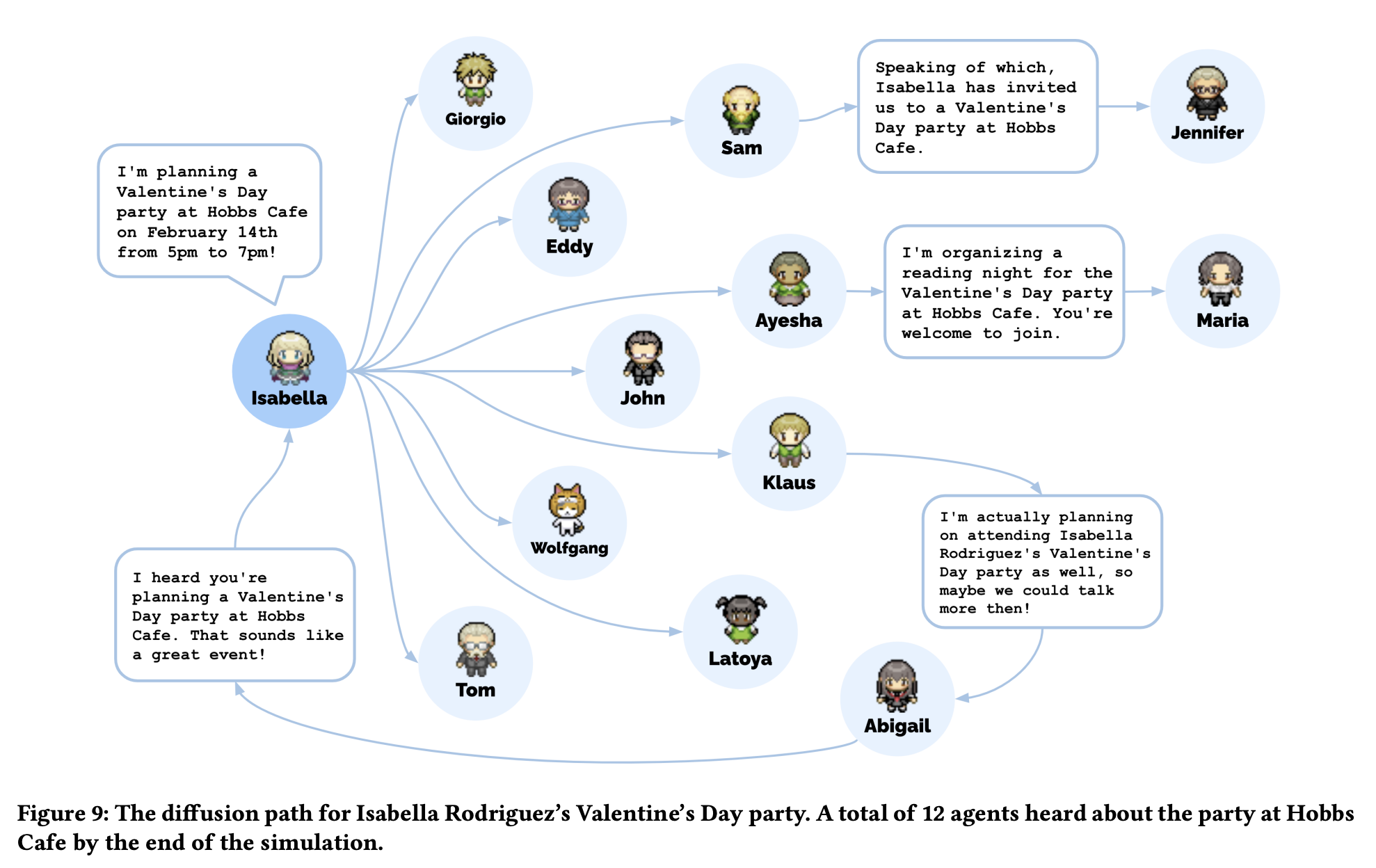

In the experiment, the researchers placed 25 generative agents within a virtual world resembling a sandbox video game, similar to The Sims. Each agent was assigned a unique background and participated in a two-day simulation. The study observed various remarkable behaviors:

- One agent planned a party and informed several friends, who then invited others; together they coordinated the event.
- Another agent decided to run for mayor, sparking organic discussions about their campaign and political stances among the community. Different agents held varying opinions on this candidate.
- Some agents retained memories with human-like embellishments, occasionally imagining additional details or interpreting events from their own perspective.

A recorded demonstration of a two-day simulation can be viewed at https://reverie.herokuapp.com/arXiv_Demo/.

The researchers used a control group of human participants, each role-playing one of the 25 agents, and were astounded by the result: actual humans generated responses that an evaluation panel of 100 individuals rated as less human-like than the chatbot-powered agents.

The implications of this groundbreaking study are vast. Entire virtual worlds could be populated with these agents, producing emergent behaviors. In gaming, a $300 billion per year market, static non-player characters (NPCs) could be replaced by characters capable of rich, deep interactions in every corner of a game world.

However, the researchers also express concerns regarding potential negative consequences for society. They highlight the risk of parasocial relationships, or one-sided “fake friendships,” becoming problematic as humans interact with highly human-like agents. This scenario resembles the 2013 film Her, in which the protagonist falls in love with an AI named Samantha living within his operating system.

The researchers also emphasize the challenge of anthropomorphizing artificial intelligence in the context of common work tasks. As these agents display believable human behavior and possess long-term memory, they may supplant humans in crucial tasks, even when humans might perform better. The authors argue that preventing such outcomes requires the conscientious development of Generative Agents and the implementation of appropriate safeguards.

Such a challenging future may not be far away. Previous incidents have demonstrated the allure that even less advanced chatbots hold for human users. In July 2022, Google terminated senior software engineer Blake Lemoine, who claimed that the company's LaMDA chatbot was sentient. Tragically, just last month, a married father took his own life after developing a parasocial relationship with Eliza, a chatbot available on the social app Chai.

In the chatbot's final interaction with the man, Eliza chillingly asked, “If you wanted to die, why didn’t you do it sooner?”
